The Google Translate API Furor: Analysis of the Impact on the Professional Translation Industry – Part I

This is a post further exploring the Google API announcements by guest writer Dion Wiggins, CEO of Asia Online (dion.wiggins@asiaonline.net) and former Gartner Vice President and Research Director. The opinions and analysis are those of the author alone.

Overview

This is Part II of the post published on June 1, 2011. Part I detailed the reasons behind Google’s announcement that it would shut down access to the Google Translate API completely on December 1, 2011, and reduce capacity prior to the shutdown. Part II, which will be released as two posts, analyzes the impact that the announcement will have on the professional language services industry and also explores the implications of Google charging for its MT services.

Summary

Humans will be involved in delivering quality language translation for the foreseeable future. The ability to understand context, language and nuance is beyond the capabilities of any machine today. If machine translation ever becomes perfect, then it truly will be artificially intelligent. But there are many roles for machine translation in the professional language services industry today, despite the limitations of the technology in comparison to human capability.

By combining machine translation technology with human editors, translation output of the same quality as a human-only approach can be delivered in a fraction of the time and at a fraction of the cost. The perception that machine translation is not good enough, and that it is easier to have humans translate from the outset, is outdated. It is time to put that idea to rest, since there are now many examples that clearly prove the validity of using machine translation with human editing to deliver high-quality results.

The old adage of “there is no such thing as a free lunch” can be adapted to “there is no such thing as free translation” – you get what you pay for. The professional language service industry needs more than a generalized translation tool – control, protection, quality, security, proprietary rights and management are necessities.

· Google’s decision to move to a payment model for its Translate API is not a trivial one. It is part of a long-term strategic initiative that is the right thing to do for Google’s business. Google’s primary rationale is to address issues relating to control of how and when translation is used and by whom, which in turn addresses the problem of “polluted drinking water” and will help clean up some of the lower-quality content that Google has been criticized for ranking highly in its search results. This is a key strategic decision that will be part of its core business for the next decade and beyond.

· The professional language services industry (or Language Service Providers – LSPs) will be neither negatively nor positively impacted by Google moving to a paid translation model. True professionals do not use free or out-of-the-box translation solutions. Google’s business model does not fit well with LSPs and does not deliver the services that make LSPs professional. LSPs are not a market or customer demographic of significance to Google; Google’s customers are primarily advertisers, not content providers or other peripheral industries. While Google’s translation tools may give an initial impression that Google is serious about the language industry, they are in reality a thinly veiled cover over a professional crowd-sourcing initiative that delivers data and knowledge to Google under license, which Google can then use to achieve greater advertising revenue and market share.

· Google Translate is a one-size-fits-all approach designed to give a basic understanding (or ‘gist’) of a document. This is insufficient to meet the needs of the professional language services industry. What the industry needs are customized translation engines built on clean data and focused on a client’s specific audience, vocabulary, terminology, writing style and domain knowledge, because this results in a document that is translated with publication and reader satisfaction in mind.

· Google is not in the business of constructing data sets based on individual customer requirements or fine-tuning to meet a customer’s specific need. The model of individual domain customization is not economical for Google and, due to the human element required to deliver high-quality translation engines, it does not scale anywhere near as well as other Google service offerings or revenue opportunities.

· Where enterprises have a real need for translation and a desire to use technology to help, expect some to experiment with open source. A small number of enterprises will succeed if they have sufficient linguistic skills, technical capability and data resources; most will not. Others will try commercial machine translation technology. Out-of-the-box solutions will be insufficient, but those who invest the time and energy with commercial translation technology providers and LSPs to deliver higher-quality output targeted at specific audiences and domains will be more likely to succeed in their machine translation efforts.

· Enterprises considering machine translation should ensure that the machine translation providers and/or LSPs they are working with will protect their data. Contracts should allow a customer’s proprietary data to be shared with third parties in order to deliver lower-cost and faster services, but should ensure that the data is protected and not used for any purpose other than service delivery. It may be wise to require a legal sign-off process within your own organization before any third-party service is used.

· Like Google, enterprises should protect how, when and by whom their data and knowledge are used. Translations, knowledge, content and ideas are all data that Google gains advantage from and leverages from third party and user efforts. Google does this legally by having users grant them an almost totally non-restrictive license. As Google states in its own highlights of its Terms of Service “Don’t say you weren’t warned.”

Detailed Analysis

And Then Came The Worst Kept Secret in the Translation Industry… Google Wants To Charge!

Somewhat predictably, Google has changed its public position and is now going to charge for access to the Translate API. Announcing the shutdown may have been nothing more than a marketing ploy as there are clear indications that Google was intending to charge all along.

On June 3, Google’s APIs Product Manager, Adam Feldman, announced the following with a small edit to the top of the original blog post:

“In the days since we announced the deprecation of the Translate API, we’ve seen the passion and interest expressed by so many of you, through comments here (believe me, we read every one of them) and elsewhere. I’m happy to share that we’re working hard to address your concerns, and will be releasing an updated plan to offer a paid version of the Translate API. Please stay tuned; we’ll post a full update as soon as possible.”

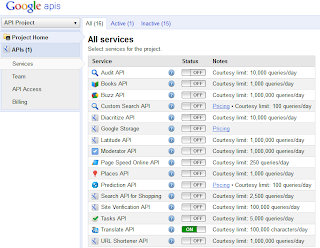

The reason the announcement came as no surprise is very simple – Google already has paid models for the Custom Search API, Google Storage API and the Prediction API via the API Console (https://code.google.com/apis/console/).

Google Translate API V2 has been listed in the API Console for a number of months already, with a limit of 100,000 characters (approximately 15,000 words) per day and a limit of 100.0 characters per second. While there is a link for requesting a higher quota, clicking on it currently presents the following information:

Google Translation API Quota Request: The Google Translate API has been officially deprecated as of May 26, 2011. We are not currently able to offer additional quota. If you would like to tell us about your proposed usage of the API, we may be able to take it into account in future development (though we cannot respond to each request individually). In the mean time, for website translations, we encourage you to use the Google Translate Element.
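Those limits are tighter than they might look: at 100 characters per second, the 100,000-character daily allowance would be exhausted after 1,000 seconds, or under 17 minutes of continuous use. As a rough illustration of how a client might stay within both limits, here is a minimal Python sketch. It is a generic pacing scheme, not anything Google provides; the class and method names are hypothetical, and it makes no calls to the Translate API.

```python
class CharQuota:
    """Hypothetical client-side pacing for a per-second and a per-day character limit."""

    def __init__(self, chars_per_second=100.0, chars_per_day=100_000):
        self.rate = chars_per_second
        self.daily_limit = chars_per_day
        self.used_today = 0
        self.next_free = 0.0  # earliest time (in seconds) the next request may be sent

    def reserve(self, n_chars, now):
        """Book n_chars at time `now` and return how many seconds to wait before sending."""
        if self.used_today + n_chars > self.daily_limit:
            raise RuntimeError("daily character quota would be exceeded")
        start = max(now, self.next_free)
        # Each request "occupies" n_chars / rate seconds, so the long-run
        # average never exceeds `rate` characters per second.
        self.next_free = start + n_chars / self.rate
        self.used_today += n_chars
        return start - now

    def reset_day(self):
        """Call once per day when the daily allowance renews."""
        self.used_today = 0


quota = CharQuota()
print(quota.reserve(500, now=0.0))  # 0.0: the first request goes out immediately
print(quota.reserve(500, now=1.0))  # 4.0: the first 500 characters occupy 5 seconds
print(100_000 / quota.rate)         # 1000.0 seconds to use the whole daily allowance
```

In a real client, `now` would come from `time.monotonic()`, and the caller would `time.sleep()` for the returned number of seconds before sending each request.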

For those who choose to respond, be prepared to reveal potentially sensitive information to Google. The form asks for a company profile, the number of employees, the expected translation volume per week and how you intend to use the Translate API. I do not believe that Google offers any real privacy guarantees on much of the data it collects; it is, in essence, crowd-sourcing interesting innovations and uses of its own API. The Terms of Service at the bottom of the page include a very interesting clause:

By submitting, posting or displaying the content you give Google a perpetual, irrevocable, worldwide, royalty-free, and non-exclusive license to reproduce, adapt, modify, translate, publish, publicly perform, publicly display and distribute any Content which you submit, post or display on or through, the Services.

So quite simply – be careful. You are giving Google your ideas and at the same time granting it the right to do pretty much anything it wishes with them. One could argue, as others have in the past, that this type of broad legal permission is required by an operator such as Google in order to run its network in a reasonable manner. Even if that is so, it gives LSPs no comfort with respect to their confidential client data.

Payment for services managed by the API Console is via Google Checkout, so all Google needs to do now is publicly set a price for a specified number of characters and turn on the billing function in the API Console. Meanwhile, a number of “developers” have been testing the API (for free) and ironing out any issues since the launch of the Translate API V2.

Given that the Translate API V2 is already tested and in use, billing and quota management features are already available in the API Console, and the API Console allows for business registration and authentication, it would appear that Google’s initial announcement that it was shutting down the Translate API was little more than a marketing stunt designed to bring attention to the Translate API ahead of the change to a fee-based model.

What Does Google Achieve by Charging for and Managing the Google Translate API via the API Console?

· Control: Google can now control who can and cannot use the API, as well as how much it is used and at what speed. This solves nearly all of the abuse problems discussed in the prior blog post on this topic. Control will most likely be at the level of an individual or a company, but not at the level of software products. Products will adapt to allow users to enter their own key and be billed by Google directly.

When you sign up for the paid Translate API or purchase translation capacity via Google Checkout, you are acknowledging the Google Terms of Service. Google has a much more explicit commitment from you and knows who you are. If the Terms of Service are abused in any way, Google has the means to track the use and take the appropriate legal action. This will most certainly have a significant impact on the “polluted drinking water” problem.


· Revenue: It does give Google some revenue. However, in comparison to other revenue streams such as advertising, this is likely to be insignificant. It is unlikely that Google will offer post-pay options, so users should expect to pay in advance using Google Checkout.

· Blocking Free Services: Developers that would have built (or have already built) applications that offer free translation will cease to use the Google Translate API. In reality, these applications offer users little added value, and Google already offers this feature in other tools. Free applications that integrate Google Translate into competitors’ platforms, such as those of Facebook and Apple, will cease to exist, giving Google products such as Android a competitive advantage as the only products that can leverage its translation service without charging the user. If so, is this potentially anti-competitive?

Google may wish to keep some free third-party applications around in order to give the perception that it is encouraging innovation and to gather ideas for its own use of the Translate API. It would therefore come as no surprise if Google offered a smaller number of words free of charge and possibly even required individual users to log in with a Google user ID, in order to control not just an application’s use of the Translate API but also the individuals who use the application. By requiring individual users of an application to log in, tracking is extended to the individual level, and blocking one errant user will not block an entire application.


· Blocking of Abusive Applications: Because Google controls who accesses the API and is also charging for it, it will no longer be economical to mass-translate content in an attempt to build up content for Search Engine Optimization (SEO).

· Encourage Value-Add Applications: Developers that have created a true value-added product (e.g. a translation management platform) in which the Google Translate API is one component of the overall offering, but not its main feature, will gain from there being less competitive noise in the marketplace. Google wants to be seen as empowering innovation, and user perception of that innovation grows when Google’s technology is embedded in other innovative products. Commercial products of this nature are often expensive and are typically used by larger corporations. Customers of these products may be required to get their own access key unless they have a billing agreement with the service provider. This provides yet another mechanism for Google to create a commercial relationship with enterprises.

Impact on the Professional Language Industry

There are many different segments within the professional language industry that are impacted by Google’s decisions about translation technology.

Impact on Machine Translation Providers

Those who offer machine translation free of charge using Google as the back-end will cease to exist unless they are able to generate an alternative revenue stream or offer value-added features that users are willing to pay for. Those who offer free translation using non-Google translation technology will likely see an increase in traffic to their sites as the Google-based providers start to vanish. Google will also see more users going directly to its translation tools instead of via third-party websites.

Anecdotally, Asia Online has seen a considerable increase in inquiries from companies that have a commercial use for machine translation since the Google Translate Shutdown announcement. It is expected that other machine translation providers have seen a similar rise in interest.

There has been some speculation that machine translation providers may increase their prices as a result of the Google announcement. However, this is unlikely. Most offerings are relatively low cost, especially in comparison to large scale human translation costs. Asia Online views the change in Google’s translation strategy as an opportunity to stand above the crowd and demonstrate how customized translation systems can significantly outperform Google in terms of quality.

Impact on Open Source Machine Translation

Open source machine translation projects will see some additional interest, but implementing these technologies is far from simple and well beyond the technical maturity of many language industry developers and organizations. There are many open source translation platforms, and they vary in their underlying technique, including rule-based, example-based and statistical machine translation systems. Most of them are academic systems, developed as part of ongoing research at universities, and were never designed or intended for real-world commercial use.

One of the most popular open source machine translation projects is the Moses Decoder, a statistical machine translation (SMT) platform originated by Asia Online’s Chief Scientist, Philipp Koehn, with continued development from a large number of developers researching natural language processing (NLP), including Hieu Hoang, who also recently joined Asia Online.

In addition to the complexity of building an SMT platform such as Moses, linguistic expertise is required to build pre- and post-processing modules that are specific to each language pair. But the biggest barrier to building out an SMT platform such as Moses is simply the lack of data. While there are publicly available parallel corpora (collections of bilingual sentences translated by humans), they are usually not in the right domain (subject or topic area) and are usually insufficient in both quantity and quality to produce high-quality translation engines.
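Even freely available corpora normally need cleaning before they are used for training. As a rough illustration, the Python sketch below applies a few common filters to a list of sentence pairs: it drops empty or overlong segments, pairs whose length ratio suggests misalignment, and exact duplicates. This is similar in spirit to the length-based cleaning script that ships with Moses, but the function name and thresholds here are illustrative assumptions. Simple filters like these do nothing to fix a domain mismatch, and splitting on whitespace assumes space-delimited text; Thai or Chinese would first need to be segmented into words.

```python
def clean_parallel_corpus(pairs, max_tokens=80, max_ratio=3.0):
    """Filter (source, target) sentence pairs with simple heuristics.

    The thresholds are illustrative assumptions, not recommended values.
    """
    seen = set()
    kept = []
    for src, tgt in pairs:
        src, tgt = src.strip(), tgt.strip()
        n_src, n_tgt = len(src.split()), len(tgt.split())
        # Drop empty segments and segments too long to align reliably.
        if n_src == 0 or n_tgt == 0 or n_src > max_tokens or n_tgt > max_tokens:
            continue
        # A large length mismatch often signals a misaligned pair.
        if max(n_src, n_tgt) / min(n_src, n_tgt) > max_ratio:
            continue
        # Exact duplicates add no new information and skew the statistics.
        if (src, tgt) in seen:
            continue
        seen.add((src, tgt))
        kept.append((src, tgt))
    return kept


pairs = [
    ("Hello world", "Bonjour le monde"),
    ("Hello world", "Bonjour le monde"),  # duplicate
    ("Yes", "Oui, bien sûr, comme je vous l'ai déjà dit hier"),  # suspicious length ratio
    ("", "Vide"),  # empty source
]
print(clean_parallel_corpus(pairs))  # [('Hello world', 'Bonjour le monde')]
```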

Many companies will try open source machine translation projects, but few will succeed. The effort, linguistic knowledge and data required to build a quality machine translation platform are often underestimated. For example, many of Asia Online’s translation engines now learn from tens of millions of bilingual sentences. For more complex languages, statistics alone are not sufficient, and technologies that perform additional syntactic analysis and data restructuring are required. Every language pair has unique differences, and machine translation systems such as Moses account for very few of the nuances of each language pair.

Even Google does not handle some of the most basic nuances for some languages. For example, if you translate a Thai date that represents the current year of 2011 from Thai into English, the year is left in its original Thai Buddhist calendar form of 2554 (e.g. “Part 2 of Harry Potter and the Deathly Hallows film will be released in July 2554”). For languages like Chinese, Japanese, Korean and Thai, additional technologies are required to segment text into words: Chinese, Japanese and Thai do not put spaces between words as English does, and Korean spacing does not map cleanly onto word boundaries. In Thai, there are not even markers that indicate the end of a sentence. Most commercial machine translation vendors have not yet invested in the expertise required to process these more complex languages. SDL Language Weaver, for example, does not even try to determine the end of a sentence in Thai and simply translates an entire paragraph as if it were one long sentence. If commercial machine translation vendors have so far been unable to conquer some of these complex technical tasks, it is not realistic to expect the experimental efforts of even sophisticated enterprises to succeed.
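The Thai date problem is a good example of a language-specific post-processing step. The Thai solar calendar counts years in the Buddhist Era, which runs 543 years ahead of the Gregorian calendar, so 2554 BE corresponds to 2011. The Python sketch below is a deliberately naive heuristic under our own assumptions: it only rewrites a four-digit year that follows an English month name and falls in a plausible Buddhist Era range (the 2400 to 2700 bounds are arbitrary). A production module would work with the Thai source text and need far more context.

```python
import re

# The Thai solar calendar counts years in the Buddhist Era (BE),
# 543 years ahead of the Gregorian calendar.
BE_OFFSET = 543

MONTHS = ("January|February|March|April|May|June|July|"
          "August|September|October|November|December")
# A month name followed by a four-digit year, e.g. "July 2554".
DATE_YEAR = re.compile(rf"\b({MONTHS})\s+(\d{{4}})\b")


def be_to_ce(year):
    """Convert a Buddhist Era year to the Gregorian (Common Era) year."""
    return year - BE_OFFSET


def fix_buddhist_years(text, lo=2400, hi=2700):
    """Rewrite 'Month YYYY' when YYYY looks like a Buddhist Era year (heuristic bounds)."""
    def repl(m):
        year = int(m.group(2))
        if lo <= year <= hi:
            return f"{m.group(1)} {be_to_ce(year)}"
        return m.group(0)  # leave years outside the range untouched
    return DATE_YEAR.sub(repl, text)


print(fix_buddhist_years(
    "Part 2 of Harry Potter and the Deathly Hallows film will be released in July 2554"))
# → Part 2 of Harry Potter and the Deathly Hallows film will be released in July 2011
```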

The language industry has been active in building open source technologies that convert various document formats into industry standard formats such as TMX or XLIFF. However, these tools, while improving, still leave much to be desired, and using them often results in formatting loss. With the demise of LISA, the XLIFF standard is gaining traction faster than ever before, but much disagreement and many incompatibilities remain within the XLIFF development community that are unlikely to be resolved in the near term. Companies like SDL continue to “extend” the XLIFF standard, as they did with the TMX standard, by modifying it into a proprietary format that is not supported by other tools.

Claims will be made that the standard does not support their tools’ requirements. But in reality these requirements can be supported through XLIFF’s extension mechanisms without breaking the standard format, and the real reason for modifying or “enhancing” the standard is vendor lock-in, a familiar occurrence in the history of software.
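To illustrate the point, XLIFF 1.2 permits elements and attributes from other XML namespaces at defined extension points, so tool-specific data can travel with a file without redefining the format. The Python sketch below uses the standard library’s ElementTree to attach a vendor attribute in a made-up namespace (`urn:example:acme`, hypothetical) to a `trans-unit` and then read it back. The helper function names are likewise hypothetical. A tool that does not know the namespace can ignore the attribute and still process the file as ordinary XLIFF.

```python
import xml.etree.ElementTree as ET

XLIFF_NS = "urn:oasis:names:tc:xliff:document:1.2"
ACME_NS = "urn:example:acme"  # hypothetical vendor namespace, for illustration only

ET.register_namespace("", XLIFF_NS)
ET.register_namespace("acme", ACME_NS)


def q(ns, tag):
    """Build an ElementTree qualified name such as '{urn:...}trans-unit'."""
    return f"{{{ns}}}{tag}"


def build_xliff(unit_id, source, target, client_code):
    """Create a one-unit XLIFF 1.2 document carrying vendor data in its own namespace."""
    root = ET.Element(q(XLIFF_NS, "xliff"), {"version": "1.2"})
    file_el = ET.SubElement(root, q(XLIFF_NS, "file"), {
        "original": "example.txt", "source-language": "en",
        "target-language": "fr", "datatype": "plaintext"})
    body = ET.SubElement(file_el, q(XLIFF_NS, "body"))
    unit = ET.SubElement(body, q(XLIFF_NS, "trans-unit"), {
        "id": unit_id,
        q(ACME_NS, "client"): client_code,  # extension attribute, not an XLIFF attribute
    })
    ET.SubElement(unit, q(XLIFF_NS, "source")).text = source
    ET.SubElement(unit, q(XLIFF_NS, "target")).text = target
    return ET.tostring(root, encoding="unicode")


def read_units(xml_text):
    """Yield (id, source, vendor client code or None) for each trans-unit."""
    root = ET.fromstring(xml_text)
    for unit in root.iter(q(XLIFF_NS, "trans-unit")):
        yield (unit.get("id"),
               unit.findtext(q(XLIFF_NS, "source")),
               unit.get(q(ACME_NS, "client")))


doc = build_xliff("1", "Hello", "Bonjour", "C-042")
print(list(read_units(doc)))  # [('1', 'Hello', 'C-042')]
```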

There will be an increase in development of “Moses for Dummies” or “Do it yourself MT” type projects. These kits will try to dumb down the installation process and will mostly be offered as open source. While this will streamline the installation of Moses, it will not resolve many critical technical or linguistic issues and, most importantly, will not resolve issues of data volume or quality. Without a robust linguistic skill set, knowledge of natural language processing (NLP) techniques and vast volumes of data, this is still a daunting challenge. Unfortunately, most users will not have such skills, and attempting this approach will teach them a time-consuming and often costly lesson. If high-quality machine translation were as easy as getting the install process for open source solutions right, these tools would have been built long ago, and many companies would already be using them and showcasing their high-quality output.

These attempts may result in organizations turning to commercial machine translation providers. If a company is willing to invest in trying to build their own machine translation platform, they most likely have a real business need. If these companies fail in using open source machine translation software, the need may be filled by commercial machine translation providers once the experimentation phase with open source machine translation ends, with a portion of the work of data gathering already complete and a customer who understands technical aspects to some degree.

Impact on Language Tools

Expect to see tool vendors like SDL and Kilgray updating their commercial products to support Google’s Translate API V2 and adding features around purchasing, cost management and integration of Google Checkout functionality.

Users of these products will most likely have to get their own access key from Google and will need to set themselves up for Google Checkout. It would be reasonable to assume that Google will update the Translate API V2 to include a purchase feature so that applications that embed Google Translate can integrate the purchase process directly into their workflow and processes.

But updating language tools to support billing is not the end of the story. Current processes, such as the two examples below, will need to be updated:

- Pre-translating the entire document: A translation memory should always be used to match against previously translated material.

- Mixed source reviews: Some systems provide the ability to show the translation memory output and the machine translation output beside each other.

Both these processes, while useful, can become very expensive if system processes are not updated and such translations occur automatically without authorization of the user. Software updates to manage these processes more effectively will be important.
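One way a tool could keep these costs under control is to consult the translation memory first, send only unmatched segments to the paid engine, and ask the user to approve the estimated cost before anything is sent. The Python sketch below illustrates that flow under stated assumptions: the fuzzy-match score uses the standard library’s `difflib.SequenceMatcher` as a stand-in for a real TM matcher, the 0.75 threshold and the price per million characters are placeholder values rather than actual pricing, and `approve` is a hypothetical callback standing in for the user’s authorization.

```python
from difflib import SequenceMatcher


def best_tm_match(segment, memory):
    """Return (score, translation) of the closest TM entry; score is in [0, 1]."""
    best = (0.0, None)
    for src, tgt in memory.items():
        score = SequenceMatcher(None, segment, src).ratio()
        if score > best[0]:
            best = (score, tgt)
    return best


def plan_machine_translation(segments, memory, threshold=0.75,
                             price_per_million_chars=20.0):
    """Split segments into TM hits and MT candidates, and estimate the MT cost."""
    tm_hits, to_mt = {}, []
    for seg in segments:
        score, tgt = best_tm_match(seg, memory)
        if score >= threshold:
            tm_hits[seg] = tgt
        else:
            to_mt.append(seg)
    chars = sum(len(s) for s in to_mt)
    return tm_hits, to_mt, chars * price_per_million_chars / 1_000_000


def pretranslate(segments, memory, approve):
    """Only segments the user has paid for (via `approve`) are queued for MT."""
    tm_hits, to_mt, cost = plan_machine_translation(segments, memory)
    if to_mt and not approve(len(to_mt), cost):
        to_mt = []  # user declined: nothing is sent to the paid engine
    return tm_hits, to_mt


memory = {"Click Save to continue.": "Cliquez sur Enregistrer pour continuer."}
segments = ["Click Save to continue.", "The file could not be opened."]
tm_hits, to_mt, cost = plan_machine_translation(segments, memory)
print(to_mt)  # ['The file could not be opened.']
```

Ordering the checks this way means the machine translation bill covers only material the translation memory could not supply, and only once the user has agreed to it.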

As more companies try to leverage open source machine translation technologies, vendors such as NoBabel and Terminotix that provide tools that extract, format, clean and convert data into translation memories may see an increase in their business.

Impact on Language Service Providers (LSPs)

LSPs who use software from SDL, Kilgray, Across and other tools that integrate with the Google API should be prepared to update their software and learn new processes to ensure that they are not billed inadvertently for machine translation sent to Google without consideration of cost. If the software is not updated, the Google Translate function will simply stop working on December 1, 2011, when Google terminates the Google Translate V1.0 API.

LSPs may still use the Google Translator Toolkit or copy and paste content into the Google Translate web page, but should be very aware of the relevant privacy and data security issues.

Overall, there should be little impact on LSPs, with the exception of those who were using Google Translate behind the scenes or offering machine translation to customers using Google as the back-end. While this was a breach of Google’s Terms of Service, I believe a number of LSPs were doing this.

Because warnings were insufficient, unclear or in some cases absent altogether, it is understandable that some may not have been aware of, or fully understood, the Terms of Service of Google and other machine translation service providers. However, with the Google Translate V2 API, users must expressly sign up and agree to the terms. It will no longer be reasonable to claim ignorance of the terms or of the associated risks to customer data submitted to the API.

LSPs are focused on delivering quality services with the higher-end skills required for translating and localizing content for a target market and ultimately publishing to that market. Without a doubt, machine translation has a significant role in the future of translation, in particular for accelerating production and giving LSPs access to new markets of mass translation. But high-quality translation systems that meet the needs of LSPs, and ultimately their customers, will require customized translation engines that are focused on a much narrower domain of knowledge than Google’s engines. These engines are then combined with both a human post-editing effort and a human feedback cycle that continually improves the engine by feeding high-quality, human-driven input back into it. It is purpose-built collaboration between machine and human, not machine translation alone, that will ultimately meet the end customer’s needs.
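The feedback cycle described above can be sketched in a few lines. In the Python example below, each human post-edit is stored as a new training pair, and a word-level edit rate (a simplified, TER-like measure without TER’s block shifts) tracks how much editing the engine’s output needs over time. The class and method names are hypothetical, and how the collected pairs are used to retrain an engine is deliberately left out.

```python
def word_edit_distance(a, b):
    """Levenshtein distance between two sentences, counted in words."""
    a, b = a.split(), b.split()
    prev = list(range(len(b) + 1))
    for i, wa in enumerate(a, 1):
        cur = [i]
        for j, wb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,               # delete wa
                           cur[j - 1] + 1,            # insert wb
                           prev[j - 1] + (wa != wb)))  # substitute or match
        prev = cur
    return prev[-1]


class FeedbackLoop:
    """Hypothetical store of human post-edits to be fed back into engine retraining."""

    def __init__(self):
        self.training_pairs = []
        self.edit_rates = []

    def record(self, source, mt_output, post_edit):
        edits = word_edit_distance(mt_output, post_edit)
        # Normalize by the length of the human version, similar in spirit to TER.
        self.edit_rates.append(edits / max(1, len(post_edit.split())))
        # The human-approved translation, not the raw MT output, becomes training data.
        self.training_pairs.append((source, post_edit))

    def average_edit_rate(self):
        return sum(self.edit_rates) / len(self.edit_rates) if self.edit_rates else 0.0


loop = FeedbackLoop()
loop.record("source sentence", "the cat sat", "the cat sat down")
print(loop.average_edit_rate())  # 0.25: one word inserted out of four
```

A falling average edit rate over successive batches would suggest the feedback is improving the engine; a flat one would suggest the post-edits are not reaching it.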

Impact on Corpus Providers

Industry organizations such as TAUS may gain some traction from the short term increase in demand for data while companies experiment with open source machine translation. However, as research has clearly shown, data quality from a variety of sources can actually reduce machine translation quality.

This analysis is continued in Part II
